Getting WiGi with It. Performing and Programming with an Infrared Gestural Instrument

A Case Study

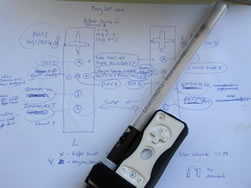

Appendix 1: WiGi Controller Implementation. Basic Routing and Functions

Appendix 2: JunXion Control Outputs in More Detail

Abstract

I offer an account of working with the Buchla Lightning MIDI controller and of the expansion of the instrument into the “WiGi” system, using Nintendo Wii-motes, JunXion and LiSa software, developed with the support and collaboration of STEIM (Studio for Electro-Instrumental Music). I emphasise my background as an instrumentalist and consider some of its practical consequences for the project. I outline the stages of development that have led to an expanded, fully functional performing instrument, and highlight some of the questions and decisions that arose over a period of five years. I consider some æsthetic and other questions raised by the process, some limitations of the system and my plans for its future development. Finally, I outline the functions of the current instrument and the issues of mapping, scaling and buffers, and illustrate how I use it.

Background

My current composition and performance practice is mainly based around an infrared MIDI control system developed between 2006 and 2010 in conjunction with STEIM (the Studio for Electro-Instrumental Music). The system is an augmented version of the Buchla Lightning MIDI controller, originally designed by Don Buchla in the late 1980s, whose functionality I have extended with Nintendo Wii-mote controllers, LiSa live sampling software and JunXion input processing software.

After playing guitars and synthesisers in rural English post-punk bands in my youth, I eventually picked up the saxophone. I was initially inspired by hearing Ornette Coleman’s records, particularly Free Jazz and Of Human Feelings. A year or two later I was very directly motivated to perform on the instrument after meeting the great improvisation teacher and drummer John Stevens and hearing the remarkable multiphonic saxophone pioneer Evan Parker perform at the London Musicians’ Collective. I liked the physical nature of the saxophone and its potential for new timbres and for a very energetic kind of expressive freedom. During this period I focussed pretty much religiously on blowing down this metal tube and resisted the temptations of electronics: I knew that once I got involved with electronics again I wouldn’t be able to keep this single-minded focus on the saxophone. I started to engage seriously with electronics again while playing saxophone with the drum ‘n’ bass night Spellbound during a residency at the Band on the Wall in Manchester in 1996/97. This music (represented, no doubt somewhat incomprehensibly, here [YouTube]) was played over a sound system so loud and with so much sub-bass that you couldn’t actually hear anything in the normal sense of the word. It required me to play longer, louder and with greater intensity than was ultimately physically possible. So I could no longer hold back from the riches offered by electronics and live processing: transforming the soprano into something like a guitar, a voice, the sound of a train or a flock of starlings. I started playing with a Clavia Nord Modular synthesizer, the first synthesizer I had touched for many years. But how was I to interact with this instrument, or with any synthesizer for that matter? For me this was not a straightforward question, and it still isn’t.

The first controller that I experimented with was, naturally enough, a MIDI wind controller, which on the face of it seemed as close to the saxophone as possible. But I was disappointed that the capabilities of the Yamaha WX5 fell so far below what a saxophone could do. Resistance and feedback from the physical object itself were completely non-existent, and the absence of anything like overtones and other extended techniques seemed to encourage the most artificial and tepid kind of playing: sadly, the WX5 feels to me more like a toy than a real instrument. The Akai EWI1000 is in many ways better: it has a much more solid design and at least has real voltage control at its core, but I still can’t quite take it seriously, although I recognise that it is a powerful and well-made instrument. So for quite a long time I felt I didn’t really have an instrument at all any more, just an ill-defined, if quite interesting, pile of electronic junk and no effective tool to interact with it. What we expect from an instrument or interface is very personal: it depends on where we have come from, on the instruments we have played before, on what we need and on what we want to hear. So although I gave up playing the saxophone, I still wanted to retain some important aspects of that instrument. And somehow I still do.

So I had a fantasy of playing a more developed electronic instrument, one which could make sounds move in space and which had the capacity to turn the computer into an instrument as flexible, fast and expressive as a saxophone. Of course this idea is not new in itself; there have been many attempts. Apart from the wind controllers I’ve already mentioned, there are many others: Don Buchla has developed many concepts, including touch controllers, the Thunder and the Marimba Lumina; Michel Waisvisz spent some 25 years working on his Hands sensor instrument; Sal Martirano developed the SalMar; Max Mathews designed the Radio Drum and Air Drum; and today the Snyderphonics Manta casts many of these older ideas in a new light, not to mention a host of other MIDI controllers and interactive instruments. Although more and more examples exist today as sensor and controller technology becomes more freely available, relatively few of these interfaces ever seem to get much past an experimental or proof-of-concept stage to become instruments capable of performance. Not everyone is really interested in a “stand-alone” instrument per se: much interface development is stimulated more by the need for a few controllers to complete specific tasks in a particular composition, or simply to demonstrate the potential of a particular technology. So I think it is useful to distinguish between interface design and instrument design. An interface can be a tool for a particular task or a limited set of tasks, but an instrument is potentially a tool for many different kinds of task, and designing one is a long-term creative process of making and remaking, thinking and rethinking. A Theremin, an EMS VCS3, a Synthophone or an Akai MPC3000 are great interfaces, but they are instruments too.
I think the Buchla Lightning is somewhere between the two, but my whole system, the WiGi, is definitely an instrument: it is multifaceted in operation and complex, and it inevitably introduces its own qualities into the process of composition.

The Buchla Lightning

I came across this obscure and peculiar instrument cheap in a studio sale and picked it up without really knowing anything about it. The Lightning is a fairly fully featured MIDI controller which tracks the location of infrared light sources built into two wands, similar to conductors’ batons or drumsticks. The movements of these wands are captured within a two-dimensional (X/Y) space of variable size, normally a person-sized rectangle of around 2 metres x 1.5 metres. From this location data, direction, speed and velocity are calculated and output as MIDI values. These values can be assigned to any controller numbers, note numbers or MIDI channels. The X/Y region can be subdivided into up to eight zones, which can be freely assigned to different continuous controllers, MIDI channels and so on. At the beginning I didn’t have much strategy for all this data: for where it could go, how it could be interpreted and how it could be derived from gesture. Lacking much experience of advanced MIDI programming, with an impenetrable manual and no other documentation, and with no user group, presets or website, I have to admit it took me quite a long time to get to grips with it. But it quickly became clear that I could plug it into a multitimbral synthesiser, wave my hands around and have 16 patches on 16 different MIDI channels playing at the same time and responding differently to my movements. After being essentially monophonic for so many years, this kind of power came as a shock, so much so that my first impulse was to put the thing back in its box and forget all about it. Dimly, I think, I realised I was getting into something that would be much more time-consuming, absorbing and intellectually complex than I could even imagine at that point.
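To make the zone idea more concrete, here is a minimal Python sketch that subdivides the X/Y playing area into eight zones and routes a wand position to a zone-specific MIDI channel and controller number. It is an illustration under stated assumptions, not the Lightning's firmware: the 4 x 2 grid layout, the 0-127 coordinate range, the choice to send the vertical position as the controller value and the `ZONE_MAP` table are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoneTarget:
    channel: int      # MIDI channel, 1-16
    controller: int   # continuous controller (CC) number


# Hypothetical 4 x 2 grid giving eight zones, each with its own channel and CC.
COLUMNS, ROWS = 4, 2
ZONE_MAP = {zone: ZoneTarget(channel=zone + 1, controller=20 + zone) for zone in range(8)}


def zone_for(x: int, y: int) -> int:
    """Return the zone index (0-7) for a wand position with x and y in 0..127."""
    col = min(x * COLUMNS // 128, COLUMNS - 1)
    row = min(y * ROWS // 128, ROWS - 1)
    return row * COLUMNS + col


def route(x: int, y: int) -> tuple[int, int, int]:
    """Map a wand position to (channel, controller, value).

    In this sketch the controller value simply follows the wand's height (y).
    """
    target = ZONE_MAP[zone_for(x, y)]
    return target.channel, target.controller, y
```

For example, a wand held at the far top right, `route(127, 127)`, lands in zone 7 and is sent as CC 27 on channel 8, while the same gesture in the bottom left corner goes to a different channel and controller entirely.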

I initially played the Buchla as part of a performing system that included several other instruments. I used it mostly as a percussion and pitch controller, much like a two-dimensional spatial keyboard. Within this system there was a fairly close correspondence between gesture and audio event: for example, sounds tended to be ordered left to right and up and down, like a keyboard or fretboard. Velocity mapping was an important part of this: amplitude, filter cut-off and attack/decay speed could all be controlled, much as they are in conventional keyboard synthesis. An example of this kind of multitimbral playing is Rings from the Twinkle3 album Let’s Make a Solar System (Ini.itu records). This is somewhere between a contrabass solo and a chamber piece. It was played live in one take on the Buchla Lightning controlling an Akai MPC4000 sampler and an Eventide Eclipse processor.
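The velocity mapping described above, where a single strike sets several parameters at once, can be sketched as one function. This is a hypothetical illustration: the parameter ranges (a 200 Hz to 8 kHz cut-off, an attack of roughly 52 ms down to 2 ms) and the exponential cut-off curve are assumptions chosen for the example, not the settings used on the sampler or processor.

```python
def velocity_to_params(velocity: int) -> dict[str, float]:
    """Spread one MIDI velocity (1-127) across several synthesis parameters."""
    v = max(1, min(velocity, 127)) / 127.0          # clamp, then normalise to (0, 1]
    return {
        "amplitude": v,                              # linear gain, louder with harder strikes
        "cutoff_hz": 200.0 * (8000.0 / 200.0) ** v,  # exponential sweep, 200 Hz up to 8 kHz
        "attack_ms": 50.0 * (1.0 - v) + 2.0,         # harder strikes give faster attacks
    }
```

The exponential curve is a common choice for cut-off because equal changes in velocity then produce roughly equal perceived changes in brightness.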

From the same album, the title track Let’s Make a Solar System (see virb.com) features David Ross on tremoloa (one-stringed Hawaiian slide guitar) and hang (steel pan drum), and myself playing Buchla Lightning and analogue synthesizers. The Lightning is restricted here to the hovering orchestral (string and woodwind) sections and the more soloistic plucked sounds, somewhere between a harp and a guitar. I think these tracks show that, as a kind of surrogate keyboard, the Lightning can handle elements like pitch and dynamics very efficiently. For me the instrument feels much more alive than a keyboard, and it enables a quite different style of playing, much more akin to that of a stringed instrument. A third piece, Klangwelt, uses percussive striking in a different way. Apart from the sequenced melodic sine pattern, this piece is made solely with the Lightning controlling various heavily processed metallic layers of plucked sounds from a Clavia Nord G2 modular synthesizer.

In this period I also experimented with mapping controllers to percussive patches on an Akai sampler, which gave me a set of percussive and keyboard-like instruments that could be stacked, making it possible, for example, for a single strike to engage three different instruments. These instruments could all be modulated separately, creating dense, fast-moving percussive layers, which I explored in a 2005 performance with saxophonist Evan Parker.[2. Evan Parker (soprano saxophone) and Richard Scott (Buchla Lightning), duet recorded in concert with Grutronic, Lancaster Jazz Festival, 15 September 2005. Available on virb.com.]

One of the less conventional features of the Buchla is the option to map a continuous controller to MIDI note numbers, which makes it possible to output pitches at quite unrealistic, Nancarrow-like speeds. When mapped to the sampler, this enables hundreds of samples to be fired off in a dense stream within the space of a few seconds: musically close to useless perhaps, but quite a terrifying weapon to have at one’s disposal in case of trouble…
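The controller-to-note idea can be modelled as a stream transformation: every change in the controller value becomes a new note, so a single fast sweep of the wand yields a dense burst of notes. This is a hypothetical sketch; using the controller value directly as the note number and firing only when the value changes are assumptions, not documented Lightning behaviour.

```python
from typing import Iterable, Iterator


def cc_to_notes(cc_values: Iterable[int], velocity: int = 100) -> Iterator[tuple[int, int]]:
    """Turn a stream of CC values (0-127) into (note, velocity) note-on events.

    A note fires whenever the controller value changes, so the note rate is
    limited only by how quickly new controller data arrives.
    """
    previous = None
    for value in cc_values:
        if value != previous:        # fire only when the controller actually moves
            yield value, velocity    # the controller value is used directly as the note number
            previous = value
```

A full sweep across the controller range, `cc_to_notes(range(128))`, produces 128 separate note events, which is how a single gesture can trigger a dense stream of samples.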

Michel Waisvisz, STEIM and LiSa

On Evan Parker’s suggestion I eventually found my way to STEIM, where I met Michel Waisvisz, Robert van Heumen and Frank Balde and was introduced to the LiSa software. LiSa introduced a non-linearity to the system which confused me at first but turned out to be a crucial development. The basic idea is simple enough: a continuous buffer of samples is held in RAM, which the software can address in a multiplicity of different ways. One image for this is a piece of tape that can be played back simultaneously at different speeds, in different directions and in loops of different lengths, by an almost infinite number of tape heads. Added to this are LiSa’s limited but interesting processing and resynthesis tools. This image of a strip of tape is ultimately far more interesting to me than a keyboard-like or triggered percussive model. In essence I conceive of it as monophonic: a single moment of time which I can pull about through space, giving it shape as I do. Although it doesn’t necessarily sound anything like a saxophone, I think this part of my thinking is still very saxophonic.
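The tape-and-tape-heads image can be modelled in a few lines: one shared buffer and several independent playheads, each with its own speed (negative for reverse) and its own loop boundaries. This is a sketch of the concept only, not of LiSa's internals; the `Playhead` class and `render` function are hypothetical, and a real implementation would interpolate between samples rather than truncating the read position.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Playhead:
    loop_start: int                   # first sample index of the loop
    loop_end: int                     # one past the last sample index of the loop
    speed: float                      # 1.0 = normal, 0.5 = half speed, negative = reverse
    position: Optional[float] = None  # None means "start at loop_start"

    def step(self, buffer: list[float]) -> float:
        """Read one sample at the current position, then advance and wrap."""
        if self.position is None:
            self.position = float(self.loop_start)
        index = min(int(self.position), self.loop_end - 1)  # guard against float edge cases
        sample = buffer[index]
        length = self.loop_end - self.loop_start
        # Python's % with a positive divisor always returns a value in [0, length),
        # so the head wraps correctly in both forward and reverse directions.
        self.position = self.loop_start + (self.position + self.speed - self.loop_start) % length
        return sample


def render(buffer: list[float], heads: list[Playhead], n: int) -> list[float]:
    """Mix n output samples from all playheads reading the same buffer."""
    return [sum(h.step(buffer) for h in heads) / len(heads) for _ in range(n)]
```

With a ten-sample buffer containing 0.0 to 9.0, a forward head looping over samples 0-3 reads 0, 1, 2, 3, 0, 1, …, while a reverse head over the same loop reads 0, 3, 2, 1, 0, …; both can run over the same material at once.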

Much of the work at STEIM over the years, at least under Michel Waisvisz’ directorship, came from his intuitive sense that electronic sounds should be connected with physical touch and movement. His legendary Cracklebox design, which uses a capacitive surface in such a way that the body becomes part of the circuit, was a result of this intuition. From the earliest days of commercially available synthesisers he felt dissatisfied with what the musical instrument industry had to offer. Michel told me, for example, that he had never liked the celebrated Moog Minimoog: he didn’t like the keyboard, the concept or the sound, which he described as “too rich” and “too much like a musical instrument.” My memory of this conversation is not perfect, but I think he may also have said “too complete”, which, even if I remember it incorrectly, is an interesting idea. The EMS VCS3 Synthi was more to his taste, but it was only when he discovered that the Cracklebox principle could be applied to it, by hacking and abusing the preset card port, that he developed a lasting relationship with that instrument. I think STEIM was never just about technology: it is also very much about this intuition that sound and body are inherently connected, and that this connection needs to be expressed through the technology, not in spite of it. For Waisvisz the “instrument” itself was never really finished, and its ongoing definition became a defining component of his work. It is no coincidence that he had a “problem” with recording: his work was so much based on the circumstantial, the conditional and the unfinished that the fixed-media form of recordings didn’t really interest him that much.

So I found myself developing a LiSa patch in Studio One at STEIM, and was surprised to learn that this was the same studio where Don Buchla had finished developing the Buchla Lightning, I think in 1986. This is worth commenting on because I believe this sense of continuity and connectedness with technological history is quite rare, and is probably part of the unique appeal of working at STEIM. So many people have worked there on so many projects over such a long time, and much of this expertise is available nowhere else. For one thing, this helps to remind us that developing an instrument can be a collective, interactive process, and this is a very useful thing: not always to be alone. Also, what is available to the player is entirely dependent on prior decisions made during programming, and these decisions are made far from the heat of the moment. It is terribly difficult to be the performer and the designer at the same time, and some temporary separation of left- and right-brain activity can be very useful, especially as composers are not necessarily the best programmers. STEIM’s support can certainly help to enable this separation of the playing from the planning. And to ask questions that you don’t realise are important…

To many, LiSa may well seem archaic and obsolete software, and they would not be completely wrong. LiSa has an unusual interface, it is restricted to MIDI resolution (no OSC), and it has very limited processing and synthesis capacities. It has some quite esoteric features; it can’t dynamically modulate envelopes, and it can’t host Audio Units or any other plug-ins. By the standards of today’s slick and gleaming software packages it seems pretty quirky in concept and quite limited in function. But for dynamic sample manipulation, and in particular for resampling and live sampling, it remains one of the best solutions out there. Sonically, LiSa seems well suited to its task too, so until something better comes along I will stay with it. But software is software: compared with the physical beauty of a vintage Selmer saxophone I am pretty much equally unmoved by all of it, so there is no reason to get too hung up on one package over another, and Ableton Live, SuperCollider or Reaktor could well have a role in a future system. In fact, I am already using Max/MSP for advanced granular synthesis in both my studio and performance systems.

Wii-motes and JunXion

If LiSa and STEIM showed me the potential of the Lightning to become a new kind of instrument, far beyond the capacity of a conventional MIDI controller, they also highlighted its weaknesses. The Buchla has no onboard capacity for scaling or editing MIDI, and it has only one assignable button per wand. In itself I do not think it is a fully fledged system: it requires additional MIDI controllers, especially buttons, and an extension of its architecture if it is to be used in a complex and dynamic way. To get better control of LiSa I added faders and buttons in the form of a Faderfox LV2 controller. This gave me the capacity to change modes, settings and buffers on the fly. Well, more or less on the fly. For a while I used a one-handed system, holding a Buchla wand in my right hand and changing parameters on LiSa and whatever other hardware I was using with my left. This was actually surprisingly flexible, and it is the system with which most of Grutronic’s Essex Foam Party CD (PSI Records) was recorded, but it was only ever a compromise. I was just setting out plans to design and build an augmented wireless handset when the Nintendo Wii-mote controllers appeared on the market. The timing could hardly have been better: they were wireless, small and cheap, and used many of the principles of the Buchla Lightning. But the complexity they enabled made another piece of software suddenly essential: STEIM’s JunXion MIDI routing and processing package.

With Frank Balde’s invaluable programming assistance, JunXion enabled me to merge the outputs of the Buchla and the Wii-motes, to do whatever MIDI or OSC data processing and scaling I need, and then to send these controllers on to LiSa and whatever other software and hardware I am using. With JunXion’s timers we were also able to multiply the functions of many of the Wii-motes’ buttons, introducing differentially timed buttons, shift keys, a shifted mode and so on, and thus something like a full range of MIDI/OSC controllers. See Appendix 1 for a diagram of the WiGi Controller Implementation.
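One way to picture how timers multiply a button's functions is to classify each press by its duration and let one button act as a shift key that changes what the others do. The following is a hypothetical Python model, not JunXion's actual configuration: the 0.4-second threshold, the button names `"A"` and `"B"` and the action table are assumptions for illustration.

```python
from typing import Optional

LONG_PRESS_S = 0.4  # hypothetical threshold separating short and long presses

# Hypothetical action table keyed by (button, press type, shift held?).
ACTIONS = {
    ("A", "short", False): "next buffer",
    ("A", "long", False): "previous buffer",
    ("A", "short", True): "toggle reverse play",
    ("A", "long", True): "start resampling",
}


class ButtonHandler:
    """Turns timed press/release events into actions, with one button as shift."""

    def __init__(self, shift_button: str = "B") -> None:
        self.shift_button = shift_button
        self.shift_held = False
        self.pressed_at: dict[str, float] = {}

    def press(self, button: str, t: float) -> None:
        if button == self.shift_button:
            self.shift_held = True
        else:
            self.pressed_at[button] = t      # remember when the press started

    def release(self, button: str, t: float) -> Optional[str]:
        if button == self.shift_button:
            self.shift_held = False
            return None
        start = self.pressed_at.pop(button, None)
        if start is None:                    # release without a matching press
            return None
        kind = "long" if t - start >= LONG_PRESS_S else "short"
        return ACTIONS.get((button, kind, self.shift_held))
```

In this sketch the shift state is read when the button is released, so the shift key must still be held at the end of the press; with two timings and one shift key, a single button already carries four functions.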

The WiGi in Action

My performances with this instrument are highly improvisational. In performance I typically have several buffers of sound material available. I can select one of these bodies of material to play, change the start and end points of the loop within a two-and-a-half-minute sample buffer, and manipulate pitch, amplitude and filter cut-off frequency. In my current system I have six different modes of play available, with options for different tempos, forward and reverse play, and LiSa’s rough-and-ready granular modes: all of these are controlled by the Wii-mote buttons and accelerometers and by the positions of the two wands in space. The system also has velocity-responsive enveloped zones distributed at the centre and edges of the X/Y region, which I can play percussively by striking within the demarcated zone; most of these are subject to a controlled degree of randomisation. Whereas the control of pitch from the continuous controllers is linear and very exact, this aspect of the system is purposely semi-linear: it is almost impossible to perform a melody accurately on it, but instead it gives general control over pitch and very accurate control of velocity and amplitude.
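Both behaviours described above can be sketched in Python: two controller values setting loop boundaries inside the two-and-a-half-minute buffer, and a strike zone that passes velocity through exactly while randomising pitch around a centre note. This is an illustrative model, not LiSa's or JunXion's implementation; the 44.1 kHz sample rate, the 10 ms minimum loop length, the function names and the `spread` parameter are assumptions.

```python
import random

SAMPLE_RATE = 44_100                        # assumed sample rate in Hz
BUFFER_SECONDS = 150                        # the two-and-a-half-minute buffer
BUFFER_SAMPLES = SAMPLE_RATE * BUFFER_SECONDS


def loop_bounds(start_cc: int, length_cc: int, min_len: int = 441) -> tuple[int, int]:
    """Map two controller values (0-127) to loop start and end sample indices.

    The loop never runs past the end of the buffer and is never shorter than
    min_len samples (441 samples is 10 ms at the assumed sample rate).
    """
    start = start_cc * (BUFFER_SAMPLES - min_len) // 127
    max_len = BUFFER_SAMPLES - start
    length = min_len + length_cc * (max_len - min_len) // 127
    return start, start + length


def strike(velocity: int, centre_note: int, spread: int, rng: random.Random) -> tuple[int, int]:
    """Semi-linear strike zone: exact velocity, pitch randomised within +/- spread."""
    note = centre_note + rng.randint(-spread, spread)   # randint is inclusive at both ends
    return max(0, min(note, 127)), velocity
```

Passing the random generator in explicitly keeps the randomisation reproducible for testing, while in performance an unseeded generator would give the controlled unpredictability described above.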

In LiSa I can access 20 or more sound buffers during a performance, and I can change them very quickly, halfway through a phrase if need be. They are organised in presets, and I can have as many of those as I need, so a vast amount of material is potentially available: ultimately the only limit is the size of the computer’s hard drive. I have some favourite buffers that I use all the time, and I have buffers constructed for particular purposes, e.g. buffers made from extended saxophone technique or string quartet, and also buffers made from samples of particular players, for example shakuhachi player Clive Bell, percussionist Gustavo Aguilar and bassist Adam Linson… I would like to develop this aspect more, creating specific sound materials for the particular people I am performing with. I’d like to get further into the specificity of the sounds, and perhaps also find more systematic ways to build buffers.

In performance I am almost entirely dependent on these buffers. They mark the limits of my universe: they determine what material it is possible for me to access and how it is distributed, both in the virtual space of the computer’s memory and in the actual space of the X-axis stretching out two metres in front of me. Yet, somehow, everything about making buffers makes me nervous. I can’t really explain why, but I still have no idea what makes a good buffer; it seems to defy any kind of rationality. Sometimes I spend days on one and it is useless, and sometimes I put one together in a few minutes because I have a concert the next day, and I am still using it years later. The majority of my improvising over the past two years has come from the same four two-and-a-half-minute buffers. This mere ten minutes of material has formed the basic material from which many compositions and performances have been derived. A “good” buffer is already a thing of beauty: it should probably be interesting to listen to on its own as a fixed-media piece, a composition in its own right.

The WiGi has in the last year or two been featured in many performances in Amsterdam, Berlin,[3. A short but spirited performance featuring the WiGi and vocalist Rebekka Uhlig was given at last year’s Interaktion Festival, Berlin, 3 January 2009. Video available on blip.tv (3:30).] Hamburg and Bratislava, in numerous solos and duets, with the group Grutronic, percussionists Gustavo Aguilar, Phillip Marks and Michael Vorfeld, trombonist Hilary Jeffrey, guitarist Olaf Rupp and saxophonist Evan Parker, amongst others. It has also featured in my acousmatic and interactive compositions premiered at the MANTIS Electroacoustic Music Festival in Manchester, and on recent CD releases by Grutronic (Essex Foam Party, psi 09.07) and Twinkle3 (Let’s Make A Solar System, Ini.itu #0901, March 2009).

The WiGi in more Detail

With the addition of LiSa, the Wii-motes and JunXion to the Buchla Lightning, I have the feeling that I have created a new instrument from Buchla’s inspiring original template, one which deserves a new name. So I call this augmented system the Wireless Gestural Instrument: WiGi. Its development also coincided with the original Lightning failing for good and its replacement by a Lightning II. This version is a little more robust and has an onboard multitimbral GM synthesizer. These limited sounds are actually of quite good quality and enable conventional synthesizer patches to be controlled by the Lightning very conveniently. However, when the onboard synthesizer on mine failed I wasn’t too sad about it, as my interest was by then far away from that conventional MIDI sound world. Apart from the basic sensor mapping I hardly use the Lightning’s onboard software features at all nowadays; the bulk of this work is done within JunXion.

One significant WiGi feature which I have developed with Frank Balde at STEIM in the last year is the use of quantization. In the Buchla Lightning’s own internal software, different regions can already be associated with different controller numbers, output to different MIDI channels, and associated with different regions of a buffer or with different processing behaviours. Expanding on this idea within the framework of JunXion and LiSa, Frank and I programmed various tables that make it possible to step rather than scroll through specific regions, creating discrete sets, or “scales.” This discrete approach allows, for example, pitch bends to be quantized into microtonal scales, and it allows jumping rather than scrolling through data values. Indeed, it allows any controller data to be broken down into any number of discrete steps in any number of ways and used for many purposes. The starting point of the addressed region can always be changed, so although we may be addressing, for example, a reduced group of 8 values (reduced from MIDI’s 128 possible values, 0 to 127), the location of those 8 values can be moved dynamically. These quantizations are also switchable, so full 128-value MIDI resolution is always still available, and any controller can be switched between its continuous and discrete states on the fly. In practice it is of course much easier to conceive of a line in space being divided into 8 sections than into 128, and it is also far easier to grasp individual points along the line intuitively and to commit them to muscle memory. This application of quantization gives greater accuracy and repeatability, especially when working rhythmically. It took me years of working with the instrument to realise the necessity for this kind of flexibility, and this relatively minor change has greatly expanded the accuracy and practical functionality of the instrument.
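The switchable, relocatable quantization described above can be sketched in Python: a 0-127 controller is divided into a chosen number of steps, the window of output values those steps address can be moved with an offset, and a flag restores full resolution. A second helper applies the same idea to pitch bend to produce a microtonal (here quarter-tone) scale. All names are hypothetical and the actual JunXion tables are not reproduced; the pitch-bend helper assumes the standard 14-bit MIDI pitch-bend range (0-16383, centre 8192) and a synthesizer bend range of plus or minus 2 semitones.

```python
def quantize(value: int, steps: int, offset: int = 0, span: int = 128,
             enabled: bool = True) -> int:
    """Snap a 0-127 controller value onto `steps` discrete output values.

    The output values are spread across a window of `span` values starting at
    `offset`; moving `offset` relocates the whole set of steps.
    With enabled=False the raw value passes through at full resolution.
    """
    if not enabled:
        return value
    index = value * steps // 128              # which of the steps the input falls in
    out = offset + index * span // steps      # place that step inside the window
    return max(0, min(out, 127))


def microtonal_bend(value: int, cents_per_step: int = 50, bend_range_cents: int = 200) -> int:
    """Map a 0-127 controller to a 14-bit pitch-bend value snapped to a cents grid."""
    cents = (value - 64) * bend_range_cents / 64        # roughly -200 to +197 cents
    snapped = round(cents / cents_per_step) * cents_per_step
    bend = 8192 + round(snapped / bend_range_cents * 8192)
    return max(0, min(bend, 16383))                     # clamp to the 14-bit range
```

With `steps=8` the whole controller range collapses to the eight values 0, 16, 32, …, 112; calling `quantize(v, 8, offset=40, span=16)` instead squeezes the same eight steps into the narrow window 40-54, which is the "movable set of values" idea in miniature.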

Generally speaking, I would say that once the basic physical form and software functionality of an electronic instrumental system are in place, these issues of mapping and scaling become the major focus, and as there is near-infinite potential for detail and complexity, the process of programming, testing, reflecting and reprogramming can be pretty much open-ended.

A second recent development is our implementation of a variety of timers and tables in JunXion which function more or less as sequencers or sample-and-hold/looping devices. This gives me the possibility of working with patterns and rhythmic structures in a way I haven’t been able to before. Added to this is the ability to use longer sustained/held notes and patterns, creating new possibilities for drones and more “orchestral” layered material. The introduction of long sustained loops frees me even further from the role of percussive keyboard player, letting me work with other aspects, for example diffusion, arrangement, building multiple layers and changing the contents of buffers.
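A timer-driven table acting as a looping sample-and-hold can be sketched as follows: on each clock tick the current controller value is either written into the table (while recording) or the stored value is read back (during playback), cycling through a fixed number of steps. The `SampleHoldLooper` class, its tick interface and the default of eight steps are hypothetical, chosen only to show the principle rather than JunXion's timer and table objects.

```python
class SampleHoldLooper:
    """A table of `steps` controller values, advanced by an external timer tick."""

    def __init__(self, steps: int = 8) -> None:
        self.table = [0] * steps
        self.index = 0
        self.recording = True

    def tick(self, live_value: int) -> int:
        """Call once per timer tick; returns the controller value to send out."""
        if self.recording:
            self.table[self.index] = live_value   # sample and hold the live input
        out = self.table[self.index]
        self.index = (self.index + 1) % len(self.table)  # loop back to step 0
        return out
```

The rate at which the timer calls `tick` sets the tempo of the pattern; once recording is switched off, the captured gesture repeats as a loop while the hands are free for other tasks such as changing buffers.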

With these additions the WiGi has become a much more rounded instrument; in fact it is a collection of instruments (see Appendix 2: JunXion Control Outputs in More Detail). In different modes, and with different gestures and controllers, I can now work in a variety of ways with a greatly expanded palette of textures and layers. In addition to pianistic and percussive playing, it is now capable of generating individual melodic lines, rhythmic pulses, harmonic patterns and “orchestral” layering, and of performing many of these functions simultaneously. An example of this kind of more complex application can be heard in the group piece Foam Sweet Foam (see virb.com) from the Grutronic CD Essex Foam Party (PSI Records). Here the drum machine and analogue synthesizer sounds are controlled by the WiGi/JunXion, forming a complex and almost soloistic rhythmic line. Another example is my acousmatic composition Ghosts of the Gamecock (see virb.com), based on percussion sounds, bird calls and the rkang gling (a Tibetan trumpet made from a human femur). These instruments are presented both in natural/acoustic and in transformed versions. The same sounds are also used as sampled source material controlled by the WiGi, particularly in the last 90 seconds of the piece. The composition also features an analogue modular synthesizer modulated by voltages mapped from the acoustic sampled material.

For me, much greater musical freedom has come from the non-linearity of LiSa and the complex mapping and scaling possibilities of JunXion. But for the audience I have a feeling that something has also been lost in this process. I think there are many moments in my performances where the link between gesture and sonic outcome is very clear, but there are also stretches where I am engaged, for example, in moving around the buffer, loading new buffers, resampling, processing or triggering sequences, where the gestural activity is not transparently linked to the sound. If I can pull a piece of sound out of the air whose timbre, pitch and amplitude are no longer necessarily visibly related to my physical gestures, then the readability of the connection between what is seen and what is heard is clearly disrupted. Tiny, almost invisible movements, especially buffer changes, can have enormous consequences. Conversely, large percussive gestures may result in surprisingly minimal sonic activity. It is also possible that a strong spatial movement in the original sonic material is not reflected in the movement of the wands, so that a sound may travel in a different direction in real, physical space than in my gestural performance space: there are times when a clear left-to-right movement of the wands leads to a definite right-to-left movement of the sound in space. For the audience this can be quite confusing, and from informal discussions with audience members I can say that some feel more engaged and more curious when they can sense a clear relationship between movement and sound than when they can’t. As the composer, I am of course far more concerned with feeling the connection between my movements and sounds than with what I look like; I am feeling and listening, but not really looking.
But when I am onstage performing, my movements are of course an integral part of what the audience is witnessing, and any disjunction between the two may ultimately weaken the performance. I think there is something undeniably charming about the Theremin, which is so self-contained and transparent: when it is played, the body is open and exposed to such a degree that the eye hardly sees that there is an instrument there. I think this idea was one of my early motivations too, but the WiGi is quite far from it today. Whether this is an issue of real concern, and whether the WiGi is by nature a performance instrument whose functioning should have some kind of readability for the audience, are interesting questions. And they are questions that I haven’t answered yet.

The work goes on: during my next residency at STEIM we will refine the design further and continue to develop the JunXion programming. We will also try to hack a Nintendo Nunchuk board and mount it inside the Wii-mote to gain more, much-needed buttons and an analogue controller too. I will also experiment with a Nintendo Wii Fit board and a Yamaha breath controller to see whether these might be useful expansions to the system. After this process is complete, I think I will finally be able to say that the WiGi in its current form is finished. Next year, finances permitting and with the continuing support of STEIM, I plan to design and build WiGi II from scratch, less reliant on Buchla and Nintendo technologies and designed specifically from the ground up as a musical instrument interface. This instrument should integrate everything I have learned from the Buchla Lightning and the WiGi; it should have many more independent controllers than the current system and be more self-contained, and, I sincerely hope, it will prove considerably more ergonomic in use. However useful the Wii-motes are to me, they are ultimately gaming toys and are not designed to be musical instruments or to fully utilise the potential of the human hand, as my poor aching thumbs will attest!

Issues Arising from the WiGi Project

Developing this kind of system involves a great deal of patience, pragmatism and an empirical attitude. It is, in fact, real science: every proposition has to be tested. And you can't do that in the abstract; it has to be informed by experience. Strategy is a process, not a stage preceding action. So the conceptual development of ideas needs to be constantly tested against reality, or, as the military theorist Clausewitz has it, against the friction of testing through playing. The questions of what is possible, what is necessary and what is desirable need to be asked throughout the process. I think it is important to ask what your instrumental models are, and how much you are carrying over from other instruments, in order to define clearly not just what the instrument is but what you actually want to do with it. I also think we need not only to develop technologies that sense and capture the musical potential of the body, but also to understand how this desire relates to what we, as composers and listeners, need and expect to hear in our music. There are always scientific and technical questions to contend with, but I think aesthetic and artistic questions should always be at the heart of what we are doing. The question of interface is not simply one of technology and potential function, but of fully realised function, of æsthetics and of creativity. Questions of interface design cannot usefully be considered separately from the problems of composition and from the theoretical issues of what we want our music to be, or what we want it to do.

I think this also relates to a distinction I made earlier between an interface and an instrument, and I'd like to return to it here. One important aspect that I think separates an instrument from an interface is that an instrument inevitably involves controlling several aspects of the sound simultaneously. That is the very nature of a musical instrument. An interface may usefully be able to manipulate timbre, amplitude and pitch wholly independently of one another. That is an ideal for an instrumentalist too, but the reality of a musical instrument is somewhat different: there is always some unevenness and instability, some crosstalk. On woodwind instruments, for example, amplitude and pitch are always somewhat approximate, and they always influence both each other and the harmonic spectrum. The WiGi is no different. It is always producing a surfeit of information, and part of the necessary playing technique is to contain this information within useful limits and to control amplitude and pitch relatively, though not absolutely, independently of timbre. The need for this kind of control means that a regime of practice, of developing and extending technique, becomes necessary, just as it does for any other instrument. Well, not exactly the same: there are no teachers or books on Buchla Lightning and Wii-mote technique, no grades or levels to be achieved and, of course, the kinds of technique and levels of mastery you need to develop depend very much on what you want to achieve.

So solo practice is, of course, necessary. Music needs to be faster than thought (at least, I believe improvised music does), and these objects, both hardware and software, need to be fixed in the mind and body in much the same way as an acoustic instrument.

From the beginning the WiGi was intended to be a socially interactive tool, and for me this is perhaps still its most interesting aspect. Composition is very important to me, but the real inspiration for the ongoing development of the WiGi comes from the other musicians I work with: hearing a tone or a possibility onstage and wanting to react but not knowing how; finding my instrument too slow or too cumbersome, so that by the time I can react the moment has passed. It's not just about expressing my sound world; it's about how I can respond to theirs too. For example, a few months ago I played a concert in Berlin with contrabassist Klaus Janek and trombonist Hilary Jeffrey, and I realised that almost their entire sound world was legato while mine was overwhelmingly percussive. It was not always easy to meet them, and this led me to some new areas and new questions, not just in that concert but in the programming afterwards. So, in order to develop the instrument further, I definitely need to test the ongoing design and programming with other musicians in real playing situations.

So my instrument is not the result of me working alone in a studio; many people have been involved, and more will be involved in the future. Music is social, and that is perhaps becoming a lost meaning in this era. Of course it is still beautiful to craft a fixed-media piece in the solitude of the studio, and a great historical privilege to have that space and time to work in. But we shouldn't forget that music is something that can physically and psychically connect people; it has social and spiritual meanings too, and its roles as art, as entertainment and as distraction are relatively recent. For me, the continuing development of new instruments and new interfaces, which I do think is a vital area of research, not only remembers space, time, gesture and the body (all of which electroacoustic music has long been in danger of forgetting) but, perhaps surprisingly, also reminds us of some of the most basic human and collective meanings of music-making.
